So a few weeks ago I was upgrading a Windows Server 2016 cluster to 2019 when the VMM service suddenly crashed out of nowhere. I noticed the client had tried to change a VM by increasing the size of its disk, and the job failed because it lost the connection to the VMM server.

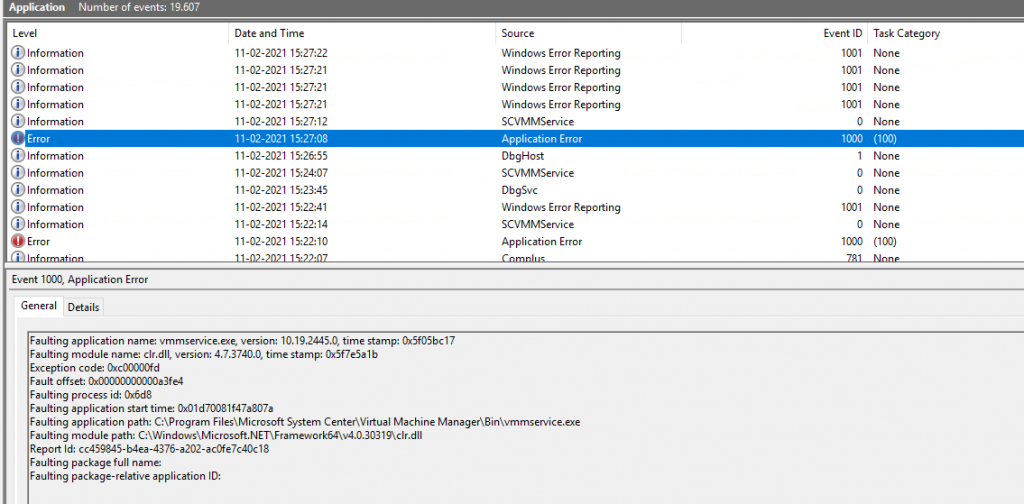

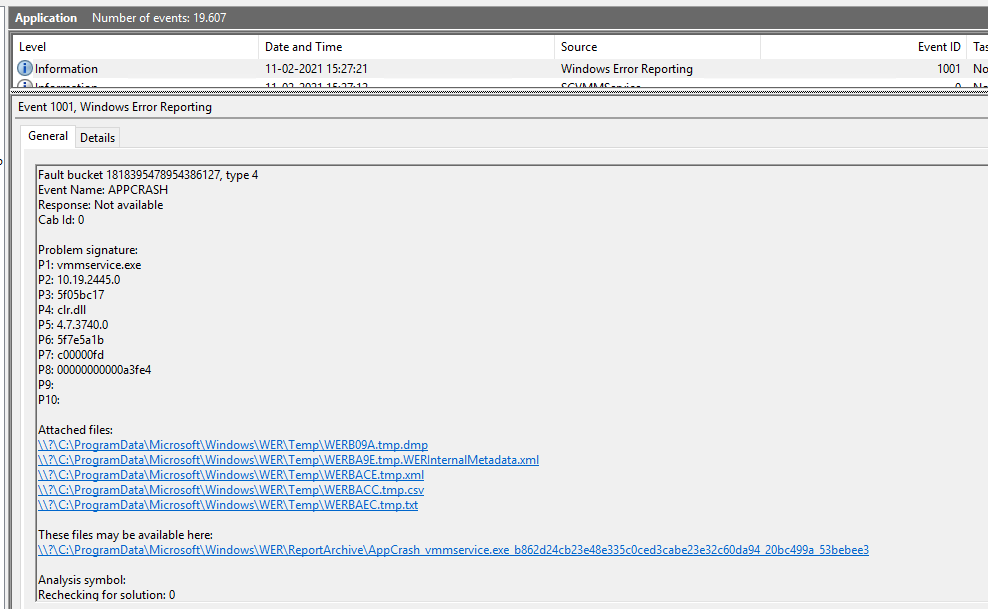

On the VMM server, the Application log showed a lot of informational messages and one error:

Faulting application name: vmmservice.exe, version: 10.19.2445.0, time stamp: 0x5f05bc17

Faulting module name: clr.dll, version: 4.7.3740.0, time stamp: 0x5f7e5a1b

Exception code: 0xc00000fd

Fault offset: 0x00000000000a3fe4

Faulting process id: 0x6d8

Faulting application start time: 0x01d70081f47a807a

Faulting application path: C:\Program Files\Microsoft System Center\Virtual Machine Manager\Bin\vmmservice.exe

Faulting module path: C:\Windows\Microsoft.NET\Framework64\v4.0.30319\clr.dll

Report Id: cc459845-b4ea-4376-a202-ac0fe7c40c18

Faulting package full name:

Faulting package-relative application ID:

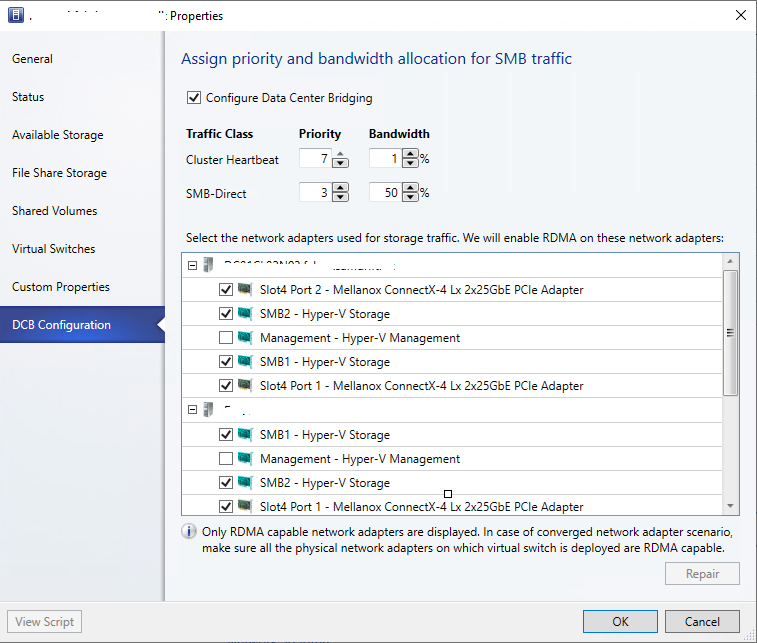
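A few fields in this event are easier to read once decoded. Exception code 0xc00000fd is the NTSTATUS value `STATUS_STACK_OVERFLOW`. The "Faulting application start time" is a Windows FILETIME (100-nanosecond ticks since 1 January 1601 UTC), and the module "time stamp" values are PE header time stamps, usually the link time in seconds since 1970 (deterministic builds can store a hash there instead). The Python sketch below decodes them. The lookup table is illustrative and covers only a couple of well-known codes, not the full NTSTATUS list.

```python
from datetime import datetime, timedelta, timezone

# A few well-known NTSTATUS codes; illustrative only, not a complete table.
NTSTATUS_NAMES = {
    0xC0000005: "STATUS_ACCESS_VIOLATION",
    0xC00000FD: "STATUS_STACK_OVERFLOW",
}

# FILETIME counts 100-nanosecond ticks since 1601-01-01 00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def describe_exception(code: int) -> str:
    """Map an exception code from the event to its NTSTATUS name, if known."""
    return NTSTATUS_NAMES.get(code, f"unknown (0x{code:08X})")


def filetime_to_datetime(filetime: int) -> datetime:
    """Convert a FILETIME value (e.g. 'Faulting application start time') to UTC."""
    # 10 ticks of 100 ns make one microsecond.
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


def pe_timestamp_to_datetime(stamp: int) -> datetime:
    """Convert a PE 'time stamp' (seconds since 1970-01-01 UTC) to UTC."""
    return datetime.fromtimestamp(stamp, tz=timezone.utc)


if __name__ == "__main__":
    print("Exception:", describe_exception(0xC00000FD))
    print("Service started:", filetime_to_datetime(0x01D70081F47A807A))
    print("vmmservice.exe time stamp:", pe_timestamp_to_datetime(0x5F05BC17))
    print("clr.dll time stamp:", pe_timestamp_to_datetime(0x5F7E5A1B))
```

Decoding the start time this way also confirms how long the service had been running before it went down.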

After troubleshooting this for a while, a friend said it seemed familiar. We did some testing and, lo and behold, it's caused by a new feature in VMM 2019 UR2: DCB configuration. Because VMM 2019 UR2 can now configure DCB settings on the NICs, it keeps a table for them in the database. Windows Server 2016 does not have the features this needs, and that was causing the issue.

It might have been triggered because I was also adding the default DCB QoS settings for the cluster, but changing them on the second S2D cluster made no difference. My guess is that it happens when you reach the last few nodes: it happened when I had 2 of 7 nodes left on the first cluster, and 1 of 3 nodes left on the second cluster.

You will, however, see this page greyed out while the cluster is in hybrid mode.

The resolution is to finish upgrading every node in the cluster to 2019; once the upgrade is complete, the error goes away.
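Because the crash only shows up while the cluster has a mix of 2016 and 2019 nodes, it can help to know exactly which nodes are still on 2016. Here is a minimal Python sketch, assuming you have already collected each node's OS build number (for example from the `BuildNumber` property of the `Win32_OperatingSystem` WMI class). It relies on the well-known build numbers 14393 for Windows Server 2016 and 17763 for Windows Server 2019; the node names and the inventory dict are hypothetical.

```python
from collections import Counter
from typing import Dict, List

# Well-known OS build numbers for the two releases involved in the upgrade.
WS2016_BUILD = 14393
WS2019_BUILD = 17763
BUILD_NAMES = {WS2016_BUILD: "Windows Server 2016", WS2019_BUILD: "Windows Server 2019"}


def summarize(node_builds: Dict[str, int]) -> Counter:
    """Count nodes per OS release; unrecognised builds are labelled by number."""
    return Counter(BUILD_NAMES.get(b, f"build {b}") for b in node_builds.values())


def is_mixed_mode(node_builds: Dict[str, int]) -> bool:
    """True while nodes in the cluster run more than one OS build."""
    return len(set(node_builds.values())) > 1


def nodes_left_to_upgrade(node_builds: Dict[str, int], target: int = WS2019_BUILD) -> List[str]:
    """Names of nodes still below the target build, sorted for stable output."""
    return sorted(name for name, build in node_builds.items() if build < target)


if __name__ == "__main__":
    # Hypothetical 7-node cluster with 2 nodes still on 2016.
    nodes = {f"HV0{i}": WS2019_BUILD for i in range(1, 6)}
    nodes.update({"HV06": WS2016_BUILD, "HV07": WS2016_BUILD})
    print(summarize(nodes))
    print("Mixed mode:", is_mixed_mode(nodes))
    print("Still to upgrade:", nodes_left_to_upgrade(nodes))
```

When `nodes_left_to_upgrade` returns an empty list, every node is on 2019 and the cluster is no longer in the mixed state described here.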

The issue has been reported to VMM support, and the developers are looking into it. Trace logs show that calls to the DCB table behave strangely and crash the VMM service. There will be no fix for this in VMM 2019 UR3, perhaps in UR4. Until then, the resolution is to upgrade all nodes in the cluster to 2019.

Hopefully this helps you figure out what’s going on if you are upgrading to 2019 with VMM 2019 UR2. Keep in mind that the issue only happens when you make a change to a VM on a cluster that is being upgraded to 2019 and still has a mix of 2016 and 2019 nodes.